Celebrating OWL interoperability and spec quality

In a Standards and Pseudo Standards item in July, Holger Knublauch gripes that SQL interoperability is still tricky after all these years, and UML is still shaking out bugs, while RDF and OWL are really solid. I hope GRDDL and SPARQL will get there soon too.

At the OWL: Experiences and Directions workshop in Athens today, as the community gathered to talk about problems they see with OWL and what they'd like to add to OWL, I felt compelled to point out (using a few slides) that:

- XML interoperability is quite good and tools are pretty much ubiquitous, but don't forget the XML Core working group has fixed over 100 errata in the specifications since they were originally adopted in 1998.

- HTML interoperability is a black art; the specification is only a small part of what you need to know to build interoperable tools.

- XML Schema interoperability is improving, but interoperability problem reports are still fairly common, and it's not always clear from the spec which tool is right when they disagree.

And while the OWL errata do include a repeated sentence and a missing word, there have been no substantive problems reported in the normative specifications.

How did we do that? The OWL deliverables include:

- Rigorous normative specification using mathematical logic
  - based on mature research results

- Overview, Guide, Reference, also part of the standard
  - Note: translations in French, Hungarian, and Japanese were contributed by the community.

- 100s of tests developed concurrently with the spec
  - demonstrating each feature
  - capturing dozens of issues

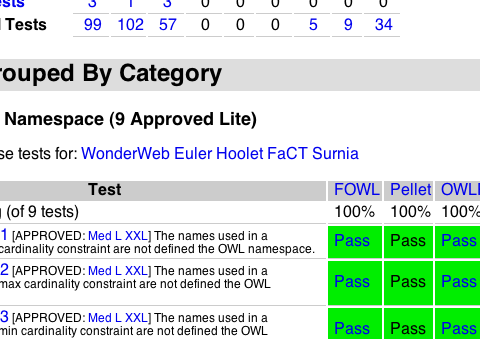

Jeremy and Jos did great work on the tests. And Sandro's approach to getting test results back from the tool developers was particularly inspired. He asked them to publish their test results as RDF data on the web. Then he provided immediate feedback in the form of an aggregate report that updated live. Once our table of test results had columns from one or two tools, several other developers came out of the woodwork and said "here are my results too." Before long we had results from a dozen or so tools and our implementation report was compelling.
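The aggregation step might look roughly like the sketch below. It is not Sandro's actual code: the real results were published as RDF, while here plain `(tool, test, outcome)` tuples stand in for the harvested data, and the tool names, test names, and the `aggregate` and `render` helpers are all hypothetical.

```python
from collections import defaultdict


def aggregate(results):
    """Build {test: {tool: outcome}} from (tool, test, outcome) records."""
    table = defaultdict(dict)
    for tool, test, outcome in results:
        table[test][tool] = outcome
    return dict(table)


def render(table, tools):
    """Render one row per test and one column per tool as tab-separated text."""
    lines = ["test\t" + "\t".join(tools)]
    for test in sorted(table):
        # A tool that has not reported on a test gets a "-" placeholder.
        row = [table[test].get(tool, "-") for tool in tools]
        lines.append(test + "\t" + "\t".join(row))
    return "\n".join(lines)


# Hypothetical results, standing in for data harvested from each tool's
# published RDF file.
results = [
    ("ToolA", "test-001", "pass"),
    ("ToolA", "test-002", "fail"),
    ("ToolB", "test-001", "pass"),
]

table = aggregate(results)
report = render(table, ["ToolA", "ToolB"])
print(report)
```

Re-running this whenever a developer publishes new data adds a column to the table, which is the kind of immediate feedback that seems to have drawn in the other implementers.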

The GRDDL tests are coming along nicely; Chime's message on implementation and testing shows that the spec is quite straightforward to implement, and he updated the test harness so that we should be able to support Evaluation and Report Language (EARL) soon.

SPARQL looks a bit more challenging, but I hope we start to get some solid reports from developers about the SPARQL test collection soon too.

Can I receive updates when the SPARQL test collection changes? I’m still working on new questions and updated answers for my SPARQL FAQ, and I intend to continue updating it regularly as SPARQL matures and is used more widely. Is there a feed that I could use to keep up with the latest changes?

It seems feed readers/aggregators should be able to autodiscover the feed from any SPARQL FAQ page itself, but in case that fails, here’s an Atom 1.0 feed of new questions/answers to the FAQ. Many aggregators won’t currently re-show items with an updated atom:updated timestamp; I hope to solve this problem soon.

Preston
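For readers curious how the feed autodiscovery mentioned above usually works, here is a minimal sketch. It assumes the page advertises its feed with a `<link rel="alternate" type="application/atom+xml">` element in the HTML head, which is the common convention; the sample markup, the `/faq/atom.xml` path, and the `find_atom_feeds` helper are made up for illustration and are not taken from the FAQ itself.

```python
from html.parser import HTMLParser


class FeedLinkFinder(HTMLParser):
    """Collect href values of <link rel="alternate"> elements typed as Atom."""

    def __init__(self):
        super().__init__()
        self.feeds = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attributes = dict(attrs)
        # rel may hold several space-separated tokens, e.g. "alternate home".
        rels = (attributes.get("rel") or "").lower().split()
        media_type = (attributes.get("type") or "").lower()
        href = attributes.get("href")
        if "alternate" in rels and media_type == "application/atom+xml" and href:
            self.feeds.append(href)


def find_atom_feeds(html):
    """Return the Atom feed URLs advertised by an HTML page, in document order."""
    finder = FeedLinkFinder()
    finder.feed(html)
    return finder.feeds


# Hypothetical page markup; the feed path is an assumption for illustration.
page = (
    '<html><head>'
    '<link rel="stylesheet" href="style.css">'
    '<link rel="alternate" type="application/atom+xml" href="/faq/atom.xml">'
    '</head><body></body></html>'
)
feeds = find_atom_feeds(page)
print(feeds)
```

An aggregator that finds no such link would need the feed URL supplied by hand, which is the fallback case described above.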