Making video pages for the W3C AC meeting

Most of the preparation for the May meeting of W3C's Advisory Committee was already done when it became clear that travel was not going to be possible, due to COVID-19. It had to be a ‘virtual’ meeting. But because you cannot ask people to be in a video conference eight hours a day, the usual presentations were replaced by recorded videos that the participants could view in their own time before the meeting.

Prerecorded video talks

As every year, W3C had planned a meeting of its Advisory Committee in the spring of 2020. It was going to be on 18 and 19 May, in Seoul, South Korea. Most of the preparation was already done when at the beginning of March it became clear that travel was not going to be possible, due to COVID-19. The decision was made to hold a ‘virtual’ meeting, i.e., a meeting by video conference.

You cannot ask people to be in a video conference eight hours a day for two days, and so the format was changed to two discussion sessions of an hour and a half, and the usual presentations replaced by recorded videos. The participants could view the videos at their leisure during the preceding week.

We asked the presenters to film themselves or record just their audio. We also asked them to film themselves only as a ‘talking head’ and rely on slides for all visuals. Those slides had to be in HTML and meet our accessibility requirements. (As for all such meetings, we had already prepared the graphical style for the slides and provided the speakers with a template; we just had to remove the word ‘Seoul’ from it.) This ensured a homogeneous set of talks with maximum accessibility, even for people who watched the presentation on a small screen, where the video had to be reduced in size.

To make the videos even easier to follow, we also made transcripts, closed captions, and even subtitles in three languages (Korean, Japanese and Simplified Chinese). And we developed HTML pages to serve as the user interface to the videos.

We did not create translated transcripts, for two reasons: We think that the people who make up the audience, although they may have difficulty understanding some English speakers, can all read English pretty well; and, secondly, it would have required more people to do the editing.

Indeed, several people expressed their appreciation of the translated subtitles, but nobody mentioned missing translated transcripts.

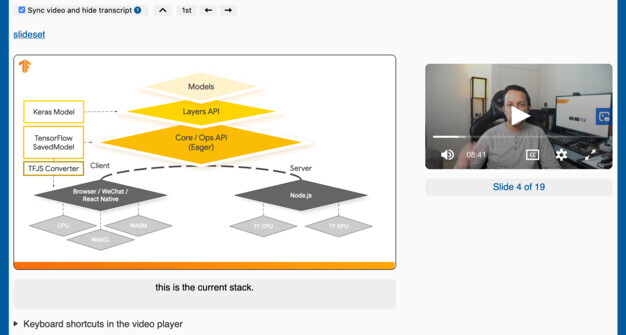

(Reduced) screenshot of a medium-size browser window with the slides and the transcript on the left and a small video on the right.

The HTML pages allow different ways to watch the talks: read the slides and the video transcript, play the video in a corner of the screen while reading the slides and the transcript, or watch a synchronized slide show and video side by side (‘kiosk mode’). The video can display captions or subtitles. When watching the slide show, the captions or subtitles are shown under the slides, because the subtitles in the video can be quite small and because we expect that people have their eyes more on the slides than on the video. By default, the page shows the English captions, but the user can select the other languages, or turn the captions off.

(Reduced) screenshot of a maximized browser window, showing ‘kiosk mode’: one slide on the left, the video on the right and subtitles (here in Korean) under the slides.

One presentation, the opening remarks by the Director, did not have slides. In this case we showed the video where otherwise the slides would be.

To make the captions and the subtitles, we hired the services of a specialized company. That gave us captions in the WebVTT format with good timing, although the text did need some editing. Many speakers in our videos were not native speakers of English and several talks were technical in nature, which is probably the reason why some words were not correctly transcribed and some punctuation was wrong. The English captions needed a little editing, the translations needed more.

The first talk available (slides and video) was the one by my colleague Coralie Mercier. She made a video in which she spoke slowly and clearly, and announced each slide explicitly. That video, and its set of captions, was a great help when developing the technology to make the transcripts and the HTML pages for each talk.

A team effort

Reviewing and editing the captions was done in part by the speakers themselves, and in part by the W3C team. For the English captions, that posed few problems: Many people in the team could contribute and many also had enough technical skills to edit the caption files directly. (All the captions were stored in the repository that serves our web site.) The number of people who could review and edit the translated subtitles was obviously much smaller, especially for the more technical talks.

There were some minor hiccups when people used an HTML editing tool to edit the captions. We often use a particular HTML WYSIWYG editor because it allows us to update our web site through HTTP PUT. However, we had no such tool for editing WebVTT files. The HTML editor worked, but required us to remove some extraneous HTML headers after editing.

There were 19 talks, varying in length from 4 to 29 minutes, totaling just over 4 hours. Some people who reviewed the translated subtitles reported that they spent 12 hours reviewing and correcting them. Maybe hiring a different agency, one that has more experience with technical subjects, can reduce that time. Or we could prepare a glossary in advance with technical words the speakers are likely to use.

The video transcripts were made from the English captions. One of my colleagues, Dominique Hazaël-Massieux, used a small script (in JavaScript) to concatenate the captions from a WebVTT file, add some HTML markup, and save the result. He also added some markup by hand to mark the places where the speaker switched to a new slide. Most of the speakers were careful to announce each slide and even if they forgot one, it was usually pretty clear what slide they were on.

The manual markup consisted just of starting a new <div> element at each slide boundary. That was enough to match each part of the transcript to the corresponding slide when the HTML page for each talk was generated.
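
The concatenation step can be illustrated with a minimal sketch. This is not the script Dominique used; the function names (escapeHtml, cueTexts, transcriptHtml) are made up for the illustration, and it assumes simple cues with plain text only (no voice tags, entities, regions or notes), so it is not a full WebVTT parser.

```javascript
// Minimal sketch: turn the cue text of a WebVTT file into transcript HTML.
// Assumption: cues contain plain text only; this is not a full WebVTT parser.

// Escape the characters that are special in HTML text.
function escapeHtml(text) {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

// Return the text of each cue, in order, with line breaks turned into spaces.
function cueTexts(vtt) {
  // Normalize line endings, then split into blocks separated by blank lines.
  const blocks = vtt.replace(/\r\n?/g, "\n").split(/\n{2,}/);
  const texts = [];
  for (const block of blocks) {
    const lines = block.split("\n");
    // A cue block has a timing line containing "-->"; skip everything else.
    const timing = lines.findIndex((line) => line.includes("-->"));
    if (timing === -1) continue;
    const text = lines.slice(timing + 1).join(" ").trim();
    if (text) texts.push(text);
  }
  return texts;
}

// Concatenate all cues into a single paragraph inside one <div>.
function transcriptHtml(vtt) {
  return "<div>\n<p>" + escapeHtml(cueTexts(vtt).join(" ")) + "</p>\n</div>";
}
```

The output is one <div> for the whole talk. Splitting it into one <div> per slide is the part that was done by hand.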

Project management

We did not use a project management tool to synchronize the work. It is something we may look into the next time, although we know it is hard to find something that everybody likes.

Instead, we used a variety of tools, according to people's preferences, but none very intensively: GitHub issues, a wiki, CVS, email… Occasionally we chatted during existing meetings when there happened to be two or three people interested in the videos. The only deadline set in advance was the date when all presentations would be published.

The whole process took six weeks, from creating the agenda and inviting the speakers to publishing the video pages, and ended on May 10, as hoped.

Hosting the videos

The videos were used pretty much as the speakers delivered them, as a single take. We did very little editing.

Six speakers chose to record only audio; thirteen made a video. Most people used their laptop to record themselves; some used a camera set up in their office.

To keep the user interface the same for the talks with only audio, another colleague of mine, Ralph Swick, converted the six audio files to videos by combining the audio with a static image. We needed to do that, because we used a particular video player and there was no audio player with the same UI.

WebCastor, already a sponsor of two W3C TPAC meetings, offered to host the videos on their StreamFizz platform. That saved us from storing the videos locally and made them available via their CDN and in multiple resolutions.

Unfortunately, it also meant that we had to load all captions into the video page twice. The captions inside the video are not accessible to the script of the page, because of the ‘same-origin policy’: the player runs in an iframe on a different domain. (Our script loaded the second set of captions with XMLHttpRequest in JavaScript and parsed them with Anne van Kesteren's WebVTT parser.)

The StreamFizz video player provides relatively coarse synchronization (via ‘timeupdate’ events). Slides and captions may start or end up to half a second too early or too late.
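
To give an idea of what the synchronization amounts to, here is a minimal sketch of the lookup a ‘timeupdate’ handler could do: given the current playback time, find the caption (or slide) that should be showing. It is an illustration, not our actual code. The names parseTimestamp and activeCue and the shape of the cue objects ({start, end, text}, in seconds, sorted and not overlapping) are assumptions.

```javascript
// Sketch: find what should be visible at a given playback time.
// Assumption: `cues` is an array of {start, end, text} objects with times
// in seconds, sorted by start time and not overlapping.

// Convert a WebVTT timestamp ("hh:mm:ss.ttt" or "mm:ss.ttt") to seconds.
function parseTimestamp(stamp) {
  return stamp
    .split(":")
    .map(Number)
    .reduce((total, part) => total * 60 + part, 0);
}

// Binary search for the cue with start <= time < end; null if there is none.
function activeCue(cues, time) {
  let lo = 0;
  let hi = cues.length - 1;
  while (lo <= hi) {
    const mid = (lo + hi) >> 1;
    if (time < cues[mid].start) hi = mid - 1;
    else if (time >= cues[mid].end) lo = mid + 1;
    else return cues[mid];
  }
  return null;
}
```

Because the lookup depends only on the time reported by the player, the displayed caption can never be more precise than the events that trigger it, which is why coarse events mean captions that are slightly early or late.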

The player does not provide a link to download the video and watch it offline and we did not provide one either.

Accessibility and the default user interface

A page that is combined by a program from various pieces made by different people who didn't see the final result, and which on top of that has scripts that modify the contents in reaction to user actions, has a high risk of not being very usable. We therefore asked the accessibility experts in our team for feedback early on. Several gave helpful comments, but special mention goes to Josh O'Connor and Daniel Montalvo, who wrote an accessibility review and helped choose the correct ARIA attributes to use.

We found out that the help text inside the video player was not accessible. The problem was that the description of each key and the key itself were visually, but not structurally separated. Assistive technology didn't pronounce the key as a separate letter. We passed on that feedback to WebCastor and made our own help text.

There appeared to be a difference of opinion between people relying on the visuals and those relying on assistive technology, with respect to one aspect of the pages:

Everybody liked the ‘kiosk’ mode (the automatically advancing slides with subtitles as the video played), but people getting to the pages for the first time had trouble realizing that the mode existed and then had trouble finding how to activate it. We tried different wordings for the button, but then, on the advice of the ‘visual’ people, just made kiosk mode the default. There was still a button, but it served now to exit from kiosk mode.

However, that led to complaints, because people relying on assistive technology now found themselves on a page that appeared to be incomplete, with only one slide and no transcript. They had trouble understanding that the button would reveal the other slides and the transcript.

So, in the end, we went back to the transcript mode as the default, with a button to enter kiosk mode. We also added a help page with a longer explanation of the button and we made it so that, if people clicked the link to the next talk while in kiosk mode, that next talk would start in kiosk mode.

What also helped was to make sure the video was always below the button that started kiosk mode. At first, the video was a fixed element on the page. It didn't scroll with the page and was visible all the time. But then we made it a ‘sticky’ element instead (with the CSS property ‘position: sticky’) and it was only visible while the slides and the transcript were also visible. People thus had to scroll down to see the video and saw the button before they saw the video.

It seems most people in the end found the kiosk mode, though we will try different labels for the button again next time.

Slide template

For every AC meeting, we prepare slide templates with the specific style for that meeting. In the past we often provided them in different formats: HTML, of course, but also PowerPoint and Keynote. This time we only made HTML, which allowed the slides to be easily integrated into the video pages.

The few speakers who didn't feel comfortable with HTML provided the text of their slides in some other format and team members converted it to HTML.

Our recent slides rely on the Shower script (or the b6+ script, which can work with the same format) during presentations. That script requires slides to be elements with a class attribute of ‘slide’, and elements on a slide that are displayed incrementally to have a class of ‘next’. The process that built the video pages looked for that markup to split up the slides, insert the video transcripts in the right places, and synchronize the slides with the video.

The process also relied on the fact that the slide style makes slides that have a fixed aspect ratio of 16:9. That allowed us to visually align the slides and the video (also 16:9) and scale them for different screen sizes.
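
The arithmetic behind that scaling is simple, and a small sketch shows why a fixed ratio helps. The function name fitBox is hypothetical, and the pages themselves may well do this purely in CSS; the sketch only shows the calculation.

```javascript
// Sketch: the largest box with a fixed aspect ratio (16:9 by default)
// that fits inside the available width and height.
function fitBox(maxWidth, maxHeight, ratioW = 16, ratioH = 9) {
  // The tighter of the two constraints determines the scale.
  const scale = Math.min(maxWidth / ratioW, maxHeight / ratioH);
  return { width: scale * ratioW, height: scale * ratioH };
}
```

For example, fitBox(1600, 1000) is limited by the width and gives 1600 by 900, while fitBox(1920, 540) is limited by the height and gives 960 by 540. Since slides and video share the same ratio, the same result applies to both.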

We will likely use the Shower slide format again for the next AC meeting and only replace the style sheet. But some investigations are underway to see if the process can be extended to accept other slide formats, even non-HTML formats.

Screenshot of a video page for the ML workshop with slides in PDF. Dominique succeeded in embedding a PDF viewer. It currently requires a very recent browser.

Standalone slides – an oversight

Originally, it was foreseen that the agenda of the meeting would have links not only to the video pages, but also to the standalone slides, so people could view them without the video (and possibly use them elsewhere in a presentation). However, the agenda ended up with just one such link, because we forgot to add the others.

As some people asked for links to the slides, we will make sure they are there for the next meeting.

CSS challenges

The video pages use the same style sheet as other AC and TPAC web pages (the ‘TPAC style’). That style determines the look of the heading, links, menus, etc. But the pages also include slides, which have their own style sheet (the ‘slide style’). Combining both on the same page obviously leads to conflicts.

Luckily, each slide is an element with a class attribute containing ‘slide’ and most style rules for slides already had ‘.slide’ in the CSS selector. Adding ‘.slide’ to the rules that didn't removed all style conflicts for elements outside the slides.

But some style rules of the TPAC style affected the slides. To remove those conflicts, some rules had to be added to the slide style to undo the effect of the TPAC style. Luckily, there were only a few of those.

Some of the presenters needed extra style rules for their slides. We asked them to put those rules inside a <style> element at the top of their slides file, so it was easy for the program that built the video pages to copy them. To make sure those rules only affected the slides and not any other elements on the video pages, the program parsed the style rules (in a rudimentary way) and prefixed them with an ID selector.
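
The prefixing can be sketched as follows. This is not the code from our program (which is in PHP), only an illustration of the idea in JavaScript. The function name prefixRules and the ID ‘#slides’ in the example are hypothetical. Like the original, it is rudimentary: it assumes flat rules, without at-rules such as @media, without comments, and without braces or commas inside strings or functional selectors.

```javascript
// Sketch: prefix every selector of simple, flat CSS rules with `prefix`.
// Assumptions: no at-rules, no comments, no commas or braces inside
// strings or functional selectors such as :is().
function prefixRules(css, prefix) {
  // Capture leading white space, the selector list and the declaration block.
  const rule = /(\s*)([^\s{}][^{}]*)\{([^{}]*)\}/g;
  return css.replace(rule, (match, lead, selectors, body) => {
    const scoped = selectors
      .split(",")
      .map((selector) => prefix + " " + selector.trim())
      .join(", ");
    return lead + scoped + " {" + body + "}";
  });
}
```

With this sketch, prefixRules("h2, .note { color: red }", "#slides") gives "#slides h2, #slides .note { color: red }", so the rule can no longer match headings outside the element with that ID.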

The discussion sessions

A typical AC meeting has presentations interleaved with discussions and a final discussion session at the end to round up the meeting. And, of course, it has coffee breaks, lunches, a dinner and pre-dinner drinks.

In this case the presentations were available on video a week before the meeting itself and the meeting consisted of discussion sessions in two 90-minute teleconferences. We also opened the teleconference early (half an hour) for people to chat informally and test their video, and left it open for more informal chat after the meeting. That doesn't replace the informal interaction at a meeting, but it helps.

It seems the participants did indeed watch all or most of the videos before the meeting. They also rated them highly. They liked the live discussion sessions as well and rated them almost as highly.

Some people reported that they skipped a few of the videos, but read the transcripts instead.

We had some 30% more participants than for physical meetings in the past, but not as many as we hoped, given that we tried to make participation easy and cheap (no travel!).

There were also fewer questions than usual. The discussions ended by themselves slightly before the end of each 90-minute session. Maybe being able to study the presentations ahead of time removes the need for some questions. Maybe being at home watching a video removes the peer pressure of seeing people around you getting up to ask questions. We did try to make it as easy as possible to queue a question, either via the in-video chat or via IRC.

Improvements in version two

The next AC meeting will be in the fall and it will again be a ‘virtual’ one. That means we have a chance to do a ‘version 2’. There will again be prerecorded videos instead of plenary talks and discussion sessions via video-conferencing.

The sections above mentioned some ways in which we could improve the video pages and the development process. We heard other suggested improvements, including the following:

The kiosk mode (automatically advancing slides as the video plays) requires JavaScript, but the default mode (static slides and transcript, with the video on the side) could be fully functional without. That requires, however, an HTML5 <video> element with a ‘controls’ attribute rather than a video player completely controlled by JavaScript, as we used in version 1. The extra buttons that are only used in kiosk mode (advance a slide, start over, etc.) do not need to be in the static page, as they can be added by the script.

The kiosk mode could be much leaner and only show the current slide, the video, the buttons to navigate the slides, buttons to jump to the next/previous talk and a button to exit kiosk mode. It could omit the page header, footer and other elements usually found on web pages, and especially the scroll bar.

In kiosk mode, the slides and the video could use the full width of the window, even if that makes the slides and the video bigger than their natural size. That allows watching the talk on a big TV screen while sitting at a distance. (One simple way to do that would be to increase the font size of the page to something like 1.15vw through CSS.)

We again want to put captions and translated subtitles under the slides. But if we can use an HTML5 video element in the page itself, rather than a video player in an iframe on a different domain, the script in the page could use the captions in the <track> elements. Without the need to load and parse the WebVTT files twice, loading would be faster. Less code probably also means fewer bugs.

In version 1, when the video is playing, the number of the current slide is indicated under the video and you can click on it to jump to the corresponding slide and transcript. It would also be nice, when reading the transcript, to be able to (re)start the video at the precise position of the slide and transcript you are currently reading. A simple button below the slide could do that. (This idea is due to Ralph Swick.)

The talks are typically short (around 15 minutes) and don't have many slides. But, for the longer ones, especially those that treat two or more distinct subjects, a table of contents at the top might be useful.

An improvement could also be to use an <audio> element for audio-only talks, instead of a video with a static image. An audio file is probably not significantly smaller than a video file with a single static image, but using <audio> avoids showing an image that contributes no information.

With more time to prepare, we should also be able to publish the videos two weeks before the meeting, instead of one.

For the discussion sessions, we had live interpreters for three languages. However, it might be feasible to have live subtitles in those languages instead. People would then be able to still hear the speakers and their tone of voice. (This idea was proposed by Naomi Yoshizawa.)

Source code

The code we used to make the video pages is available on GitHub. It is a mix of PHP and JavaScript. The PHP code is slightly simplified from our original: We removed the links to most of the videos (many of which aren't public) and removed the header and footer elements from the generated HTML pages to make the code shorter. (The header and footer contained links to other pages on our site and are not specific to the video pages.)

After the meeting ended we ‘froze’ the pages. I.e., the pages that were previously generated on the fly by the PHP code are now static HTML pages.

![[screenshot]](https://www.w3.org/cms-uploads/imported-assets/blog/screenshot-transcript.jpg)

![[screenshot]](https://www.w3.org/cms-uploads/imported-assets/blog/screenshot-kiosk.jpg)
